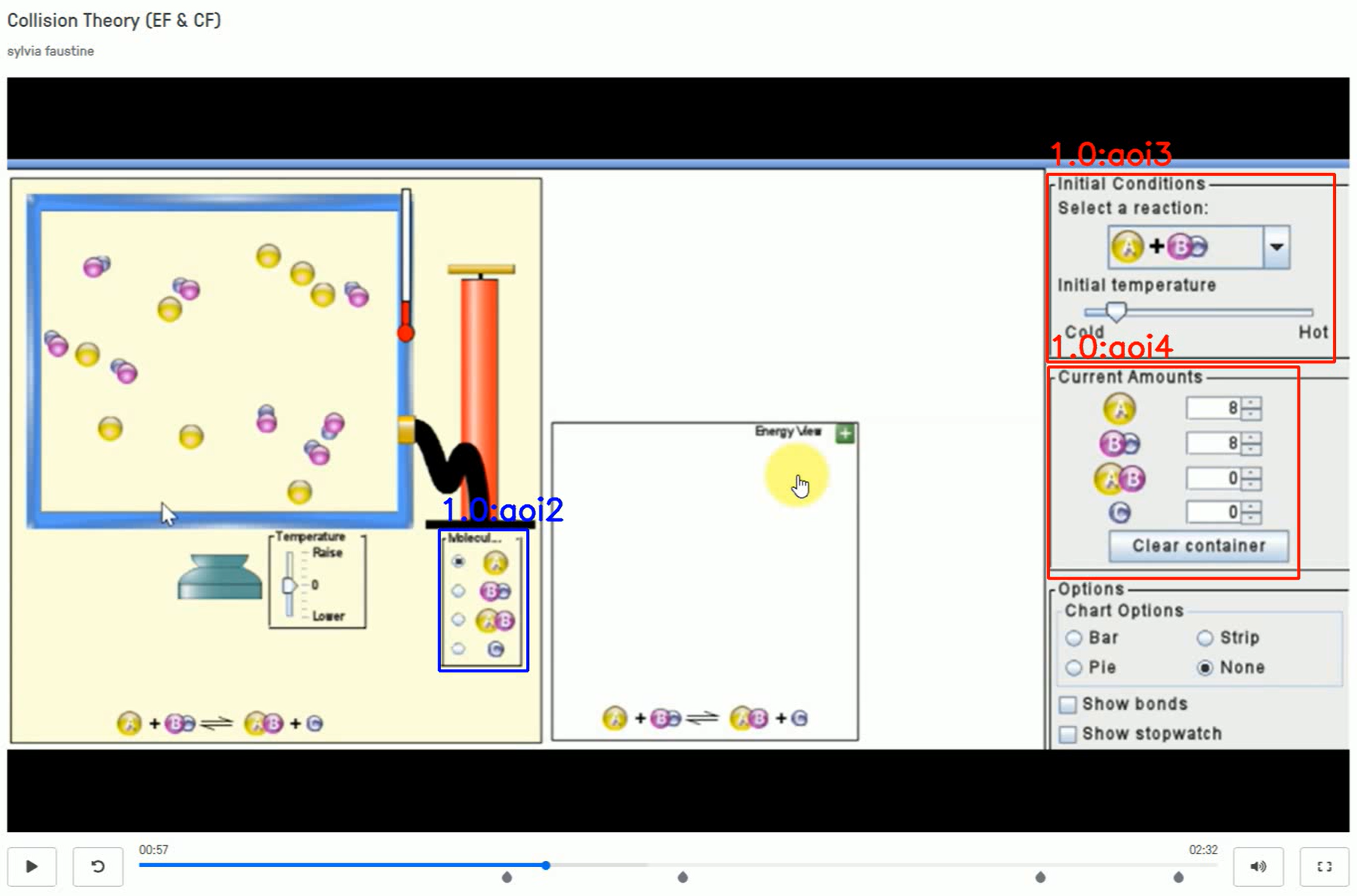

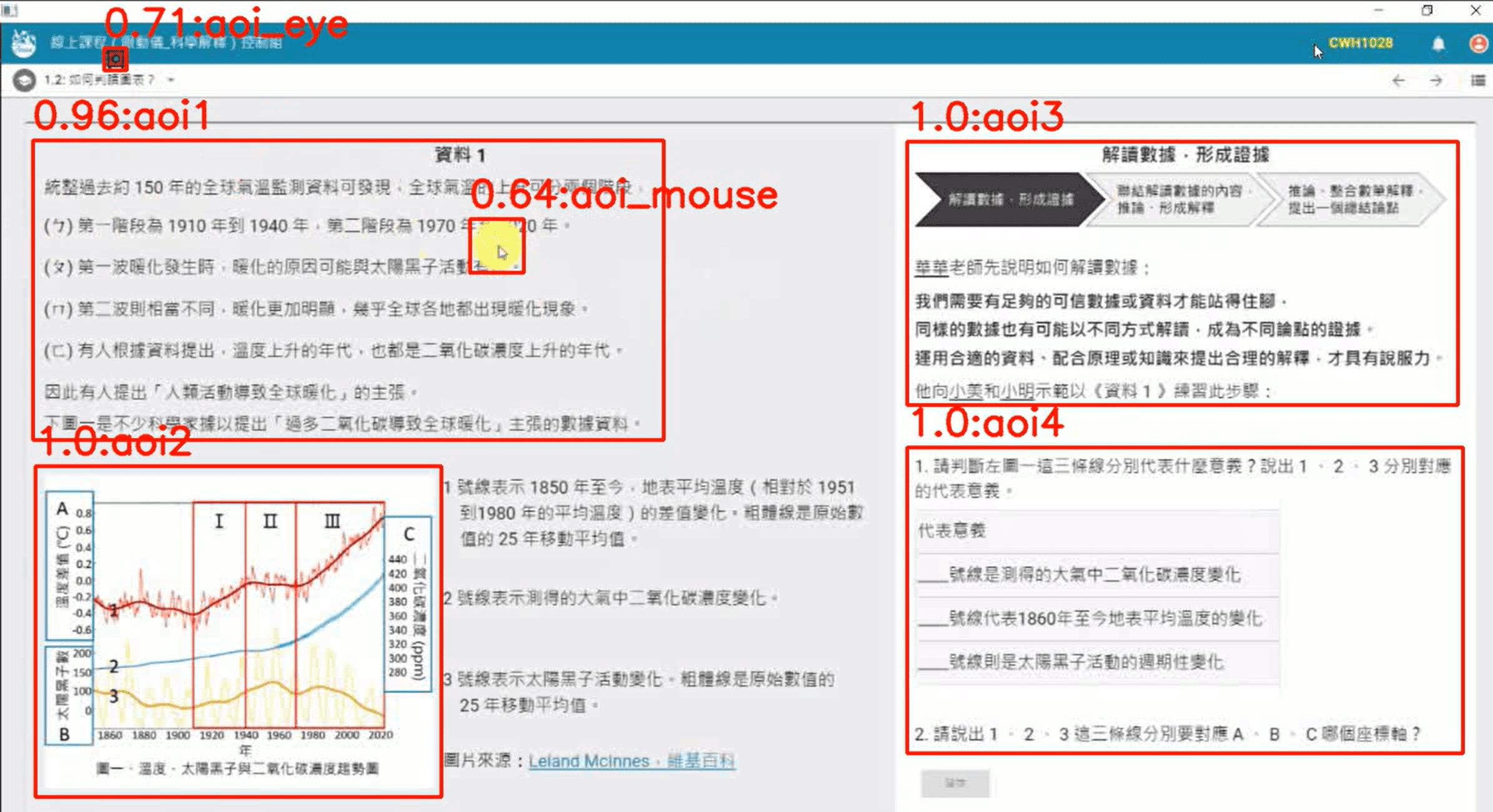

In previous studies that used eye trackers to analyze reading processes, reading materials were typically presented page by page (slide by slide). Each page displayed a portion of the reading material, and specific text passages or images were marked as Areas of Interest (AOIs). In the example shown in Figure 1, the reading process spans four pages (Slide 1 to Slide 4), and the fourth page contains two AOIs: AOI1 is text and AOI2 is an image. After finishing a page, the reader moves to the next one by pressing a designated key or clicking the mouse.
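Because each AOI in the slide-by-slide design is a fixed rectangle on a given slide, assigning a fixation to an AOI only requires the slide index and the fixation coordinates. The minimal Python sketch below illustrates this. The names `AOI`, `Fixation`, and `dwell_times`, as well as the pixel coordinates for Slide 4, are hypothetical and are not taken from any particular eye-tracking software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AOI:
    """A rectangular AOI fixed at one position on one slide (screen pixels)."""
    name: str
    x: float
    y: float
    w: float
    h: float

    def contains(self, px, py):
        # Half-open test so adjacent AOIs never both claim a boundary pixel.
        return self.x <= px < self.x + self.w and self.y <= py < self.y + self.h


@dataclass(frozen=True)
class Fixation:
    slide: int          # which slide was on screen
    x: float            # gaze position in screen pixels
    y: float
    duration_ms: float


def dwell_times(fixations, aois_by_slide):
    """Total fixation duration per AOI; fixations outside every AOI are ignored."""
    totals = {}
    for f in fixations:
        for aoi in aois_by_slide.get(f.slide, []):
            if aoi.contains(f.x, f.y):
                totals[aoi.name] = totals.get(aoi.name, 0.0) + f.duration_ms
    return totals


# Hypothetical layout for Slide 4: AOI1 (text) above AOI2 (image).
slide_aois = {4: [AOI("AOI1", 100, 100, 800, 300), AOI("AOI2", 100, 450, 800, 400)]}
fixations = [
    Fixation(4, 150, 200, 250),
    Fixation(4, 500, 600, 300),
    Fixation(4, 500, 120, 200),
    Fixation(3, 150, 200, 400),  # Slide 3 has no AOIs, so this is not counted
]
print(dwell_times(fixations, slide_aois))  # {'AOI1': 450.0, 'AOI2': 300.0}
```

Since the rectangles never move, the AOI definitions can be written once per slide and reused for every participant.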

This setup has several advantages:

- It is suitable for tasks that involve only a small amount of reading material.

- It is easy to design because the AOIs are in fixed positions on the screen, so subsequent eye-tracking analysis does not need to account for changes in AOI location.

- The analysis is relatively simple.

However, it also presents some problems:

- Because space on a single page is limited, the answer area may need to be placed on a separate page, which makes the eye-tracking experiment harder to design.

- Some assessment items, particularly competency-based ones, require detailed descriptions of a problem context that may exceed one page (as shown in Figure Z). Splitting this content across separate pages disrupts reading fluency and reduces ecological validity.

- Subsequent eye-tracking analysis requires manually marking the AOI positions at different time points (because the AOI positions are not fixed), which places a significant burden on the analysis and severely reduces its efficiency.

Therefore, to allow readers to solve competency-based problems in a more natural way, we have developed a data analysis method called Dynamic AOI (D-AOI). In brief, the eye-tracking experiment uses screen recording to capture eye movement data throughout the problem-solving process. This allows participants to scroll the screen to read materials that exceed one page and to zoom in or out on the questions. However, this approach turns the originally fixed AOI positions into dynamic ones: an AOI's position on the screen changes whenever the reader scrolls or zooms.
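When readers can scroll and zoom, the same screen position can fall on different AOIs at different moments. If the scroll offset and zoom factor were known for every gaze sample, gaze points could be mapped back into fixed document coordinates, as in the minimal Python sketch below. It assumes a simple viewport model (scroll offsets in document pixels, one uniform zoom factor) and uses hypothetical names (`ViewState`, `Rect`, `screen_to_document`, `document_to_screen`, `aoi_at`). A plain screen recording captures only pixels, not this viewport state, which is the gap that automatic AOI tagging is meant to fill.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ViewState:
    """Viewport state at one gaze sample (assumed to be known; not in a plain recording)."""
    scroll_x: float  # document x-coordinate shown at the viewport's left edge
    scroll_y: float  # document y-coordinate shown at the viewport's top edge
    zoom: float      # screen pixels per document pixel (1.0 = 100%)


@dataclass(frozen=True)
class Rect:
    """An AOI defined once, in fixed document coordinates."""
    name: str
    x: float
    y: float
    w: float
    h: float


def screen_to_document(gx, gy, view, origin=(0.0, 0.0)):
    """Map a gaze point in screen pixels to document coordinates."""
    ox, oy = origin  # screen position of the viewport's top-left corner
    return (view.scroll_x + (gx - ox) / view.zoom,
            view.scroll_y + (gy - oy) / view.zoom)


def document_to_screen(r, view, origin=(0.0, 0.0)):
    """Where AOI r currently appears on screen, as (x, y, w, h) in screen pixels."""
    ox, oy = origin
    return (ox + (r.x - view.scroll_x) * view.zoom,
            oy + (r.y - view.scroll_y) * view.zoom,
            r.w * view.zoom,
            r.h * view.zoom)


def aoi_at(gx, gy, view, aois, origin=(0.0, 0.0)):
    """Name of the AOI under the gaze point, or None if the point hits no AOI."""
    dx, dy = screen_to_document(gx, gy, view, origin)
    for r in aois:
        if r.x <= dx < r.x + r.w and r.y <= dy < r.y + r.h:
            return r.name
    return None


# Hypothetical two-part item: a long problem context followed by a question.
aois = [Rect("Context", 0, 0, 800, 1000), Rect("Question", 0, 1200, 800, 200)]
top = ViewState(scroll_x=0, scroll_y=0, zoom=1.0)
scrolled = ViewState(scroll_x=0, scroll_y=1000, zoom=1.0)
zoomed = ViewState(scroll_x=0, scroll_y=1100, zoom=2.0)

# The same screen point (400, 300) lands on different AOIs as the view changes,
# and the "Question" AOI moves and grows on screen.
for view in (top, scrolled, zoomed):
    print(aoi_at(400, 300, view, aois), document_to_screen(aois[1], view))
```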

To address this, this study will develop an Automatic Tagging AOI (AT-AOI) method to improve the efficiency of eye-tracking analysis for competency-based assessments.

In essence, AT-AOI uses computer vision (Brownlee, 2019) or a Convolutional Neural Network (CNN) architecture (Chauhan, Ghanshala, & Joshi, 2018) to automatically identify AOIs on the screen, with GPU acceleration to improve processing speed. AT-AOI has two key features:

a. Automatic identification of scaled AOIs. A problem solver may need to consult several pieces of information at once and may therefore zoom out on an AOI; conversely, they may zoom in to view details. To tag AOIs dynamically in a natural reading context, AT-AOI was developed to handle scaled AOIs.

b. Identification of AOIs with partial noise. Because problem solving takes place on a computer, an AOI may be partially covered by the mouse cursor or a floating input (spelling) window. AT-AOI must therefore be able to identify AOIs despite this kind of noise.
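Both features can be illustrated with classical multi-scale template matching, a computer-vision technique swapped in here for clarity; it is not the CNN architecture mentioned above, and the study's actual method may differ. The NumPy sketch below scores a grayscale reference image of the AOI at several zoom levels using zero-mean normalized cross-correlation (the same measure as OpenCV's `cv2.matchTemplate` with `TM_CCOEFF_NORMED`) and keeps the best match above a threshold. A small occluding object such as a cursor lowers the peak score without moving it, so the threshold controls how much occlusion is tolerated. The function names, the scale set, the 0.7 threshold, and the synthetic frame are illustrative assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def resize_nearest(img, scale):
    """Nearest-neighbour rescaling of a 2-D grayscale array."""
    h, w = img.shape
    new_h = max(1, int(round(h * scale)))
    new_w = max(1, int(round(w * scale)))
    rows = np.clip((np.arange(new_h) / scale).astype(int), 0, h - 1)
    cols = np.clip((np.arange(new_w) / scale).astype(int), 0, w - 1)
    return img[rows[:, None], cols]


def ncc_map(frame, template):
    """Zero-mean normalized cross-correlation of template at every valid offset."""
    t = template - template.mean()
    t_norm = np.sqrt((t ** 2).sum())
    windows = sliding_window_view(frame, template.shape)  # (H-h+1, W-w+1, h, w)
    wz = windows - windows.mean(axis=(2, 3), keepdims=True)
    num = (wz * t).sum(axis=(2, 3))
    den = np.sqrt((wz ** 2).sum(axis=(2, 3))) * t_norm
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(den > 0, num / den, 0.0)  # flat regions score 0


def find_aoi(frame, template, scales=(0.75, 1.0, 1.25, 1.5, 2.0), threshold=0.7):
    """Best match of template over several zoom levels, or None if below threshold."""
    best = None
    for s in scales:
        tpl = resize_nearest(template, s)
        if tpl.shape[0] > frame.shape[0] or tpl.shape[1] > frame.shape[1]:
            continue  # template larger than the frame at this zoom level
        score = ncc_map(frame, tpl)
        y, x = np.unravel_index(np.argmax(score), score.shape)
        if best is None or score[y, x] > best["score"]:
            best = {"x": int(x), "y": int(y), "w": tpl.shape[1], "h": tpl.shape[0],
                    "scale": s, "score": float(score[y, x])}
    # Partial occlusion lowers the peak score; the threshold sets the tolerance.
    return best if best is not None and best["score"] >= threshold else None


rng = np.random.default_rng(0)
template = rng.random((16, 16))             # reference image of the AOI at 100%
frame = rng.random((80, 80))                # stand-in for one screen-recording frame
frame[30:54, 20:44] = resize_nearest(template, 1.5)  # AOI displayed zoomed to 150%
frame[35:39, 25:29] = 1.0                   # simulated mouse cursor over the AOI
print(find_aoi(frame, template))
```

The sliding-window computation materializes every window and is only suitable for small images; for full-resolution video frames, `cv2.matchTemplate` or a GPU implementation would be the more practical route.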

Impact: This approach substantially reduces the time required to process eye-tracking data, enabling researchers to design more complex eye-tracking materials. It also supports deeper exploration of learners' attention distribution patterns and makes it possible to analyze their problem-solving processes retrospectively.

Figure 1. Slide-by-slide (paged, sequential) presentation of materials

Examples of Dynamic Area of Interest (AOI) Detection in Science Education Learning Processes

Scientific Explanation (with AOI, mouse, and eye-tracking detection functions)

Video: https://owncloudjohn.ddns.net/owncloud/index.php/s/9BMRob5sB7arI5q

Chemical Molecular Experiment (AOI detection remains effective after zooming)

Video: https://owncloudjohn.ddns.net/owncloud/index.php/s/pszhfBR7aTT85jV